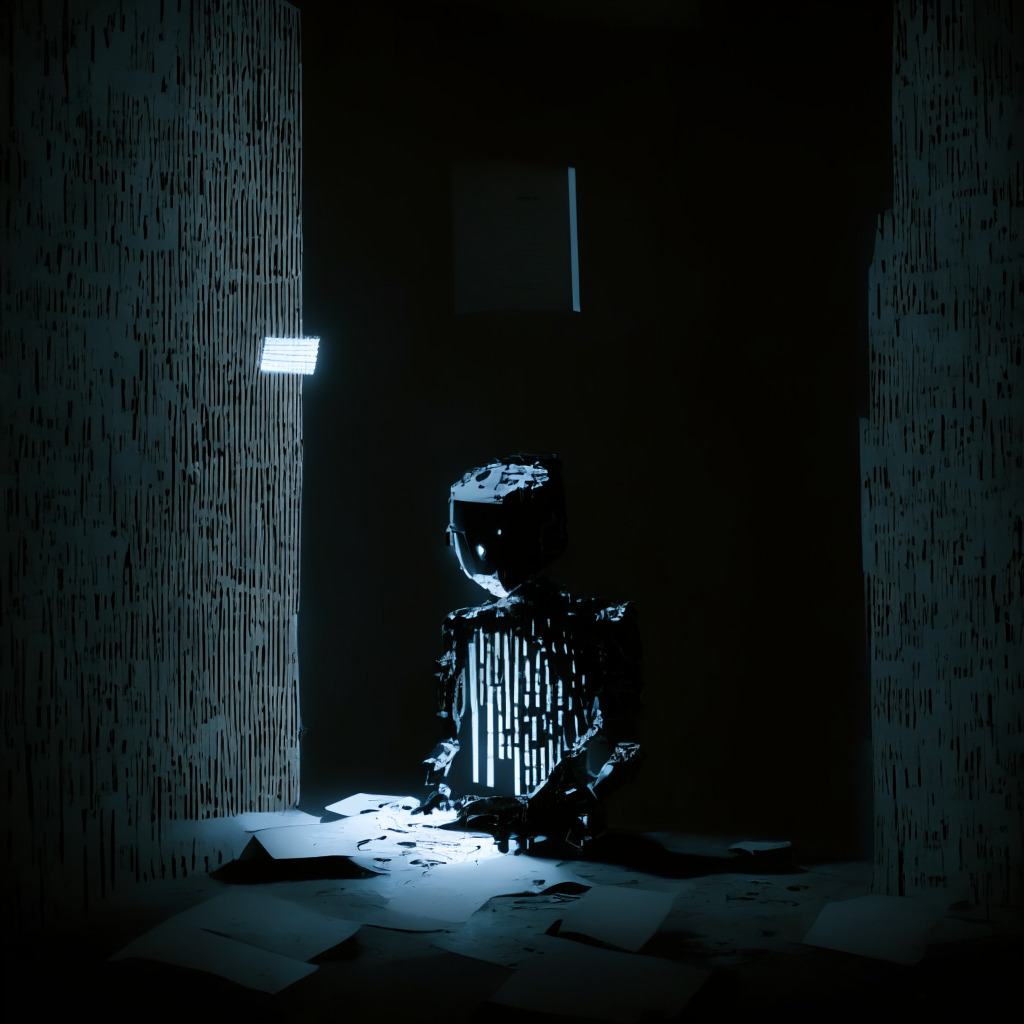

‘Content cannibalism’ is emerging as a persistent problem for artificial intelligence (AI), one that is steadily eroding its credibility. Much like photocopying an already photocopied image, AI content that is itself derived from AI content loses coherence and quality with each generation.

AI-generated text, notably from OpenAI’s ChatGPT, is showing clear signs of decay, with users reporting reduced capability alongside fewer user searches. This decay is attributed to AI models ingesting excessive amounts of AI-generated data, which produces poorer-quality output.

Scientists from Rice University and Stanford University named this progressive degradation ‘Model Autophagy Disorder’, or MAD. They argued that unless the training of generative models incorporates a sufficient amount of fresh real data with each generation, output quality will steadily decline.
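The feedback loop described above can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not the researchers’ method: a one-dimensional Gaussian stands in for a generative model, "training" means re-estimating its mean and spread, and the function name `simulate_generations` and its parameters are hypothetical. Each generation is fitted to a mix of its predecessor’s output and fresh samples from the real distribution.

```python
import random
import statistics


def simulate_generations(n_generations, sample_size, fresh_fraction, seed=0):
    """Return the fitted standard deviation after each generation.

    Toy assumption: the "real" data is N(0, 1) and each "model" is a
    Gaussian fitted to a mix of synthetic and fresh real samples.
    """
    rng = random.Random(seed)
    real_mu, real_sigma = 0.0, 1.0
    mu, sigma = real_mu, real_sigma      # generation 0 matches the real data
    history = [sigma]

    n_fresh = round(sample_size * fresh_fraction)
    n_synth = sample_size - n_fresh

    for _ in range(n_generations):
        # Samples produced by the previous generation's model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_synth)]
        # Fresh samples drawn from the real distribution.
        fresh = [rng.gauss(real_mu, real_sigma) for _ in range(n_fresh)]
        data = synthetic + fresh

        # "Train" the next generation by refitting mean and spread.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)

    return history


if __name__ == "__main__":
    closed_loop = simulate_generations(300, 10, fresh_fraction=0.0)
    mixed_loop = simulate_generations(300, 10, fresh_fraction=0.5)
    print("no fresh data, final spread: ", closed_loop[-1])
    print("50% fresh data, final spread:", mixed_loop[-1])
```

In this toy setting, the loop with no fresh data tends to lose its spread (a stand-in for diversity) over many generations, while the loop that keeps mixing in real samples stays anchored to the real distribution. Real generative models are far more complex, so this shows the intuition only.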

Some optimism persists amid the gloom: AI needs humans to maintain quality, particularly to identify and prioritize human-made content for the models. Sam Altman, the CEO of OpenAI, has one such plan in his blockchain project, Worldcoin.

The launch of Threads, Mark Zuckerberg’s Twitter clone, generated significant hype despite cannibalizing users from Instagram. It was touted as a potential training ground for AI models. The hasty launch, it was argued, was meant to generate more text-based content to train Meta’s AI models, given that it could scrape only a fraction of Instagram’s yearly earnings.

Many AIs have been trained on a wide range of data, some even on religious texts. They have learned to speak in God’s voice, which sparked a flurry of controversy, as these ‘divine’ AIs often veered toward fundamentalist interpretations.

AI pessimists, such as decision theorist Eliezer Yudkowsky, have warned of severe consequences if a superintelligent AI decides to eliminate humanity on a whim or out of need. Others, like Microsoft co-founder Bill Gates and Jeremy Howard, have argued for a more measured approach to AI. They maintain that while the risks of AI are real, they can be managed.

Source: Cointelegraph