As global economies continue to digitize, the adoption of artificial intelligence (AI) and its regulation have become contentious issues. In Germany in particular, political bodies and digital experts hold sharply opposed views on the impending AI regulations.

The German left party, Die Linke, sees significant gaps in the proposed AI Act, particularly in consumer protection and in the obligations placed on AI providers and users. The party calls for stricter rules under which high-risk systems, such as those that may threaten health, safety and fundamental rights, would be inspected by a supervisory authority before they are released onto the market. Die Linke is also campaigning for an outright ban on biometric identification systems and predictive policing systems operating in public spaces. The party seeks to educate the German government about the capabilities and limitations of AI, and it wants annual evaluations based on a standardized risk classification model.

In contrast, the center-right coalition “the Union” fears excessive regulation of AI. It calls for prioritizing AI and fostering an innovation-friendly environment in Europe. It aims to prevent the establishment of a large supervisory authority in Brussels and to ensure legal certainty, in alignment with regulations such as the General Data Protection Regulation, the Data Act and the Digital Markets Act. The Union also plans to expand existing supercomputing infrastructure to give German and European startups, as well as small and medium-sized enterprises (SMEs), broad access.

Another voice in the debate is the German AI Association (KI Bundesverband). Citing the need to compete with AI systems from the U.S. and China, it advocates practical solutions to counter the real risks and threats posed by AI rather than extensive regulation. It argues that the AI Act risks becoming an advanced software regulation and may hinder European AI companies from cementing their global positions.

In the middle of this divide is the German government. It supports the AI Act yet sees room for improvement, striving to strike a balance between regulation and fostering ingenuity. The government has been promoting AI companies and establishing cutting-edge AI research and infrastructure.

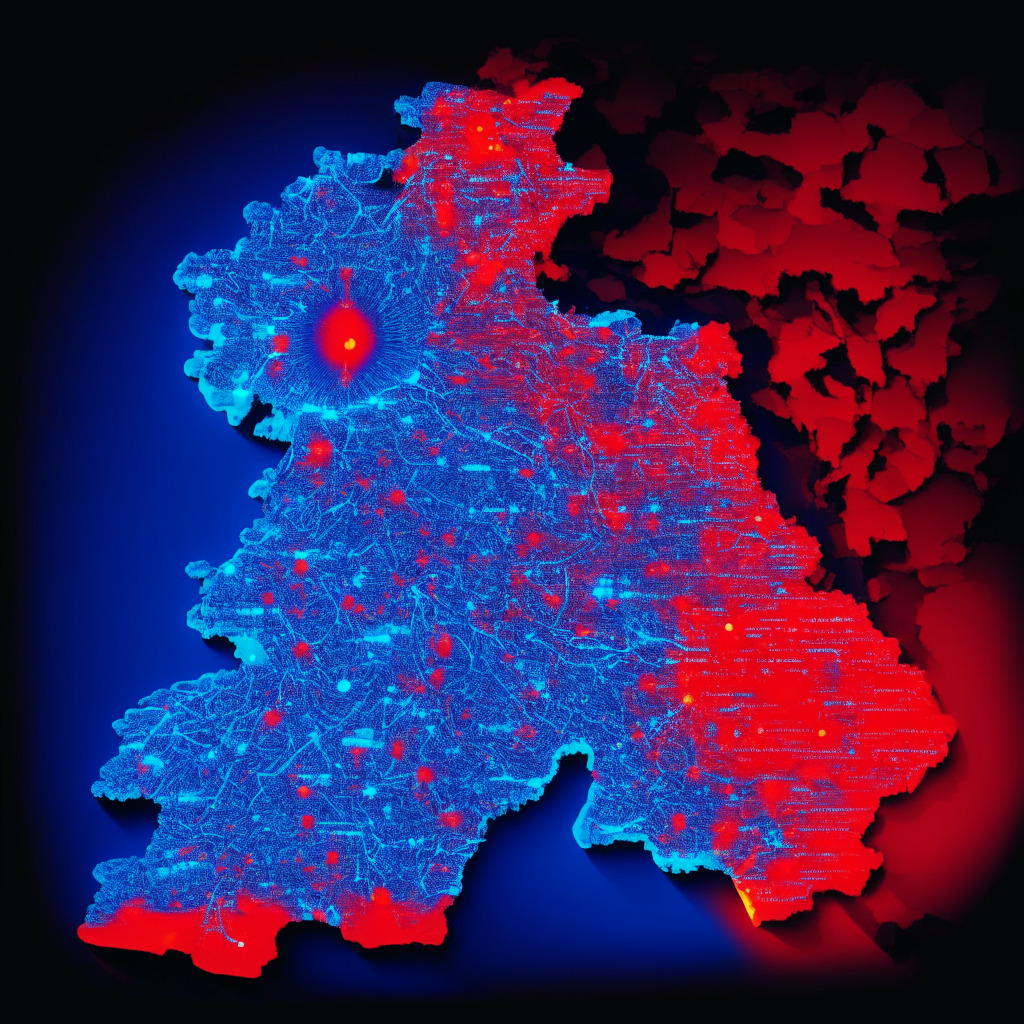

Amid these competing opinions, digital experts express concern that the German and European digital economies are falling behind globally, mainly because of the dominance of AI models from the U.S. and China. In light of these concerns, the feasibility study titled “Large AI Models for Germany” proposes the establishment of an AI supercomputing infrastructure in Germany. Holger Hoos, an Alexander von Humboldt professor for AI, also advocates the creation of a globally recognized “CERN for AI”, stressing that Europe needs a bold shift in AI strategy to succeed.

European AI regulation thus faces a tug of war between fostering innovation and ensuring stringent checks and balances. How that tension is resolved will shape the ongoing discourse around the future of AI and its implementation.

Source: Cointelegraph